A Dockerfile defines exactly how a container image is assembled, layered, and configured. As containerized applications scale across multi-platform environments, the structure of that file directly affects build speed, security boundaries, cache behaviour, and long-term maintainability. By 2026, teams building for mixed architectures, stricter compliance requirements, and larger CI pipelines will rely heavily on well-designed Dockerfiles to keep images predictable and efficient.

Most build issues, unnecessary image bloat, and security gaps originate not from Docker itself, but from Dockerfiles written without attention to layer order, context size, or runtime constraints. This guide focuses on how Dockerfiles actually work, how they should be constructed, and the engineering practices that produce fast, secure, portable images without adding operational overhead.

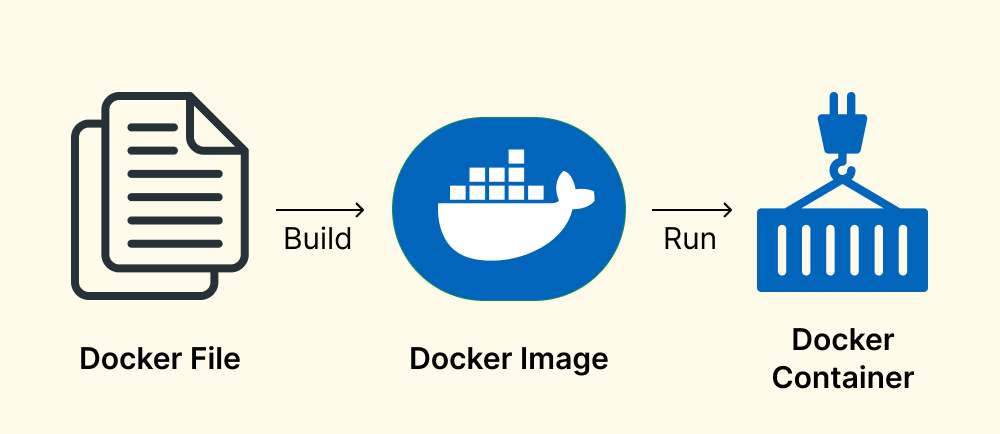

What Is a Dockerfile?

A Dockerfile defines how an image is built layer by layer, and its structure dictates caching efficiency, build determinism, and the predictability of the final runtime environment. Before addressing workflow or best practices, the underlying mechanics must be clearly understood.

Minimal Dockerfile Example

    FROM python:3.11-slim
    WORKDIR /app
    COPY app.py .
    CMD ["python", "app.py"]

How a Dockerfile Operates Internally

A Dockerfile is processed sequentially, and each instruction produces a cacheable build step: instructions that change the filesystem (RUN, COPY, ADD) add an immutable layer, while the rest record only metadata. These steps are cached and reused when possible, so a small change in the wrong place can invalidate every step that follows it. Modern Docker builds use BuildKit (the default builder in Docker Engine 23.0 and later), which enables parallelism, improved caching, and more secure handling of SSH keys, secrets, and mounts.

Enable BuildKit

    export DOCKER_BUILDKIT=1

Build using BuildKit
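A minimal sketch of such a build, wrapped in a hypothetical helper named `build_image`. The default tag `myapp:dev` and the use of the current directory as build context are assumptions; setting the variable inline is harmless where BuildKit is already the default.

```shell
# Hypothetical helper: build the current directory with BuildKit enabled.
# The default tag myapp:dev is an assumption for illustration.
build_image() {
  DOCKER_BUILDKIT=1 docker build -t "${1:-myapp:dev}" .
}
```

Calling `build_image myapp:v1` builds and tags the image in one step.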


Multi-platform Build with Buildx
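One possible shape for such a build, assuming a hypothetical helper `build_multiarch` and a registry-qualified tag passed as its only argument. The two platforms listed are the ones this guide discusses; adjust them to your targets.

```shell
# Hypothetical helper: build one tag for amd64 and arm64 and push it.
# --push is used because a multi-platform result generally cannot be loaded
# into the classic local image store; the registry name is an assumption.
build_multiarch() {
  docker buildx build \
    --platform linux/amd64,linux/arm64 \
    -t "$1" \
    --push .
}
```

For example: `build_multiarch registry.example.com/myapp:1.3.0`.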


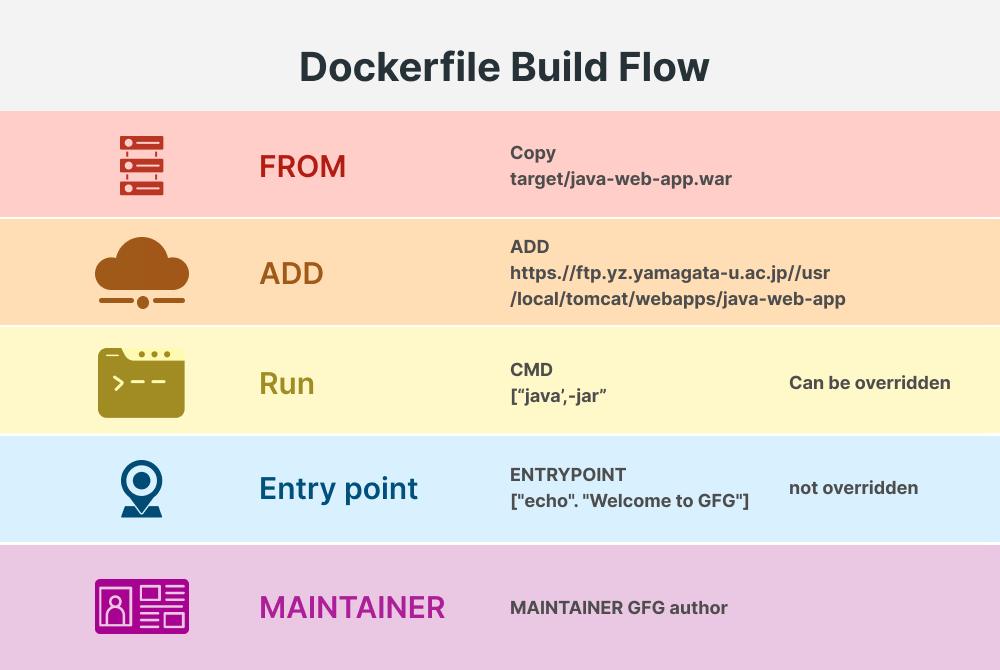

Core Instructions Engineers Work With

The essential instructions (FROM, RUN, COPY, WORKDIR, ENV, ARG, ENTRYPOINT, CMD, USER) define how dependencies are installed, how the runtime process is launched, and how environment configuration is applied. Their placement affects everything from image size to cache reuse to non-root execution at runtime.

Small Dockerfile Showing Major Directives

    FROM node:20-alpine

    # Build-time variables
    ARG NODE_ENV=production

    # Environment variables
    ENV PORT=3000

    # Set working dir
    WORKDIR /app

    # Copy dependencies separately for caching
    COPY package*.json ./
    RUN npm install --production

    # Copy application code
    COPY . .

    # Define non-root user
    USER node

    # Metadata
    LABEL maintainer="team@example.com"

    # Container start command
    CMD ["node", "server.js"]

Constructing a Dockerfile: A Precise Build Workflow

Building a Dockerfile is not just arranging instructions; it’s defining how the build engine interprets context, caches layers, and isolates runtime behavior. The workflow below reflects how Dockerfiles are engineered in real CI/CD environments, not in classroom examples.

1. Preparing Build Context and .dockerignore

The build context defines the files sent to the Docker daemon or BuildKit engine. A noisy or oversized context slows builds and invalidates cache layers unnecessarily. Using a well-maintained .dockerignore ensures that logs, temp directories, secrets, and source artifacts stay out of the image and out of the build cache.

.dockerignore example

    node_modules
    .git
    *.log
    dist/
    .env
    __pycache__/

2. Selecting an Appropriate Base Image

Base images determine security posture, final image size, and build speed. Minimal images (Alpine, distroless, Ubuntu-minimal) reduce the attack surface but require careful dependency handling, especially across multi-architecture builds. Vendor-signed images and pinned versions have become baseline requirements in 2026 for auditability and reproducibility.
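Pinning can be checked mechanically. The sketch below is a hypothetical lint helper, `find_unpinned_bases`, that prints every FROM line whose image has no tag or uses `latest`. It is a rough text check under simple assumptions (one FROM per line, no ARG substitution in the image reference), not a replacement for a real linter.

```shell
# Hypothetical lint helper: print FROM lines whose image has no tag or uses ":latest".
# Earlier stage names (FROM builder) and "scratch" are ignored; digest references pass.
find_unpinned_bases() {
  awk '
    toupper($1) == "FROM" {
      # Skip an optional --platform=... flag in front of the image reference
      ref = ($2 ~ /^--platform=/) ? $3 : $2
      # A tag or digest, if any, follows ":" in the last path segment
      n = split(ref, seg, "/")
      floating = (seg[n] !~ /:/ || seg[n] ~ /:latest$/)
      if (floating && ref != "scratch" && !(ref in stages))
        print FILENAME ":" FNR ": " ref
      # Remember stage aliases declared with AS so a later FROM alias is not flagged
      for (i = 2; i < NF; i++)
        if (toupper($i) == "AS") stages[$(i + 1)] = 1
    }
  ' "${1:-Dockerfile}"
}
```

Running `find_unpinned_bases Dockerfile` in CI and failing the job on any output is one way to enforce the rule.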

3. Structuring Instructions for Stable Caching

Instruction order directly affects cache reuse. Placing high-change commands (like copying application code) too early forces unnecessary rebuilds of heavy layers. Consolidating related RUN steps reduces the number of layers while still keeping the Dockerfile readable. Using ARG wisely avoids invalidating layers that don’t need to change on every build.

Correct build commands

    # Standard build with BuildKit
    docker build -t myapp:dev .

    # Build with explicit file and context
    docker build -f Dockerfile -t myapp:v1 ./src

    # Tagged, versioned, reproducible build
    docker build -t myapp:1.3.0 --build-arg NODE_ENV=production .

4. Building and Validating the Image

Modern builds in 2026 rely on BuildKit or Buildx to handle parallel execution, multi-platform builds, and advanced caching. After building, validation involves running smoke tests, verifying non-root execution, checking metadata (LABEL), and confirming that image size aligns with baseline thresholds. Only then is the image tagged and pushed using a versioning strategy that avoids floating tags in CI pipelines.
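Two of these checks, non-root execution and image size, can be sketched as a small gate. The helper name `validate_image` and the byte limit passed to it are assumptions; smoke tests are application-specific and are left out.

```shell
# Hypothetical validation gate: fail if the image runs as root or exceeds a size limit.
validate_image() {
  image="$1"
  max_bytes="$2"

  # An empty USER field means the image default, which is root
  user=$(docker image inspect --format '{{.Config.User}}' "$image") || return 1
  case "$user" in
    ""|root|0|0:*|root:*)
      echo "FAIL: $image runs as root" >&2
      return 1
      ;;
  esac

  # .Size is reported in bytes
  size=$(docker image inspect --format '{{.Size}}' "$image") || return 1
  if [ "$size" -gt "$max_bytes" ]; then
    echo "FAIL: $image is $size bytes (limit $max_bytes)" >&2
    return 1
  fi

  echo "OK: $image user=$user size=$size"
}
```

A pipeline might call `validate_image myapp:1.3.0 200000000` before tagging and pushing.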

Advanced Dockerfile Practices (2026 Standard)

Modern Dockerfiles in 2026 must address more than functionality. Build performance, image granularity, reproducibility, and security controls all depend on how instructions are structured. These practices reflect how high-performing teams design Dockerfiles for long-term use in production pipelines.

Multi-Stage Builds Done Properly

Multi-stage builds reduce final image size and isolate toolchains, but only when implemented with discipline. Builder stages should include compilers, package managers, and test tools, while the final stage contains only the runtime essentials. Sensitive files, build caches, and temporary assets must never leak into the final image. Proper stage naming improves readability and reduces onboarding friction.

Builder + Runtime Example

    # ---- Builder stage ----
    FROM golang:1.22 AS builder
    WORKDIR /src
    COPY . .
    RUN go build -o app

    # ---- Runtime stage ----
    FROM gcr.io/distroless/base
    WORKDIR /app
    COPY --from=builder /src/app .
    USER 1000
    ENTRYPOINT ["./app"]

Security-Focused Engineering

Security controls begin inside the Dockerfile. Images should enforce non-root execution with a defined UID/GID rather than relying on defaults. BuildKit’s secret mounts prevent credentials from being baked into layers. Signing images, pinning base image versions, using reproducible builds, and applying a HEALTHCHECK all contribute to predictable runtime behavior. Every instruction should assume scrutiny during audits.

Non-root + HEALTHCHECK + metadata

    FROM ubuntu:22.04
    RUN useradd -u 1001 appuser
    WORKDIR /app
    COPY run.sh .
    USER 1001
    HEALTHCHECK --interval=30s --timeout=3s \
      CMD ["bash", "-c", "pgrep app || exit 1"]
    CMD ["./run.sh"]

Reducing Image Size Without Breaking Functionality

Minimal images reduce attack surface but require disciplined dependency management. Use package managers in non-interactive mode, clear caches in the same layer, and avoid installing tools that never execute at runtime. Distroless or slim-based images work well for statically compiled binaries, while Alpine may introduce compatibility issues depending on musl vs glibc. Each choice should be deliberate, not habitual.
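The non-interactive install and same-layer cleanup can be sketched as follows. The helper `build_slim_base` is hypothetical; it feeds an inline Dockerfile to `docker build` on stdin, and both the base image and the single package are placeholders.

```shell
# Hypothetical helper: build a small Debian-based image from an inline Dockerfile.
# The package list (ca-certificates) is only a placeholder.
build_slim_base() {
  docker build -t "$1" - <<'EOF'
FROM debian:bookworm-slim
# Install without prompts or recommended extras, then drop the apt index in the same layer
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends ca-certificates \
 && rm -rf /var/lib/apt/lists/*
EOF
}
```

Because the cleanup runs in the same RUN instruction as the install, the package index never lands in a committed layer.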

Operational Metadata and Maintainability

A maintainable Dockerfile includes more than clean syntax. Labels define ownership, versioning, source URLs, and automated metadata used by scanners or deployment tools. Base images must be pinned with explicit versions to prevent broken builds caused by upstream changes. Teams should lint Dockerfiles using tools like Hadolint and maintain consistent formatting conventions to reduce cognitive overhead.
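Labels that change on every build, such as the commit, can be applied at build time instead of being hardcoded. This sketch assumes a hypothetical helper `build_labeled` and uses the standard `org.opencontainers.image.*` keys; the argument order and the source URL are illustrative.

```shell
# Hypothetical helper: stamp version, source, and commit labels at build time.
build_labeled() {
  image="$1"
  version="$2"
  source_url="$3"
  docker build \
    --label "org.opencontainers.image.version=$version" \
    --label "org.opencontainers.image.source=$source_url" \
    --label "org.opencontainers.image.revision=$(git rev-parse HEAD)" \
    -t "$image:$version" .
}
```

For example: `build_labeled myapp 1.3.0 https://example.com/team/myapp`.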

Handling Multi-Platform Builds (amd64, arm64, GPU)

Multi-architecture builds are now standard, not optional. Buildx allows simultaneous creation of amd64 and arm64 images, but each platform may require separate dependency handling or optimization flags. For GPU workloads, CUDA-enabled base images require stricter version pinning and validation. Proper platform declaration prevents runtime mismatches and reduces registry overhead.
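A pushed tag can be checked for the platforms it is expected to carry. The helper `has_platforms` below is a hypothetical sketch that relies on the `Platform:` lines printed by `docker buildx imagetools inspect`; it does a simple prefix match, so treat it as a starting point rather than a strict validator.

```shell
# Hypothetical check: confirm a pushed tag lists every expected platform.
has_platforms() {
  image="$1"
  shift
  manifest=$(docker buildx imagetools inspect "$image") || return 1
  for platform in "$@"; do
    # Prefix match against lines such as "  Platform:  linux/amd64"
    printf '%s\n' "$manifest" | grep -Eq "Platform:[[:space:]]+$platform" || {
      echo "missing platform: $platform" >&2
      return 1
    }
  done
}
```

For example: `has_platforms registry.example.com/myapp:1.3.0 linux/amd64 linux/arm64`.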

Common Dockerfile Anti-Patterns Engineers Still Make

Even experienced teams introduce subtle mistakes that inflate images, break caching, or open security gaps. These aren’t beginner errors; they’re the recurring issues found during deep pipeline audits and post-incident reviews. Eliminating them produces more predictable, faster, and safer builds.

Cache-Busting COPY Instructions

Placing COPY . . or other frequently changing application files early in the Dockerfile forces the rebuild of every subsequent layer. This destroys caching and slows down CI pipelines. Copy only the dependency manifest before the install step, and copy the rest of the source tree afterwards, so that unchanged dependencies are reused across builds.

Cache-busting COPY

Bad

    # BAD: copies everything early
    COPY . .
    RUN pip install -r requirements.txt

Good

    # GOOD: installs dependencies first
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY . .

Using ADD When COPY Is Enough

ADD introduces behaviors such as automatic archive extraction and remote URL fetching, both of which can lead to security gaps or unintended changes to the image filesystem. COPY is explicit and predictable, making it the correct default unless ADD’s additional functionality is truly required.

Bad: Using ADD unnecessarily

    ADD app.tar.gz /app/

Good: COPY

    COPY app.tar.gz /tmp/
    # Create the target directory first; tar -C fails if it does not exist
    RUN mkdir -p /app && tar -xzf /tmp/app.tar.gz -C /app

Installing Unnecessary Toolchains or Leaving Them in Final Images

Compilers, package managers, and debugging utilities should never remain in the final production image. They not only bloat the image but also expand the attack surface. These tools belong in a builder stage, and the final stage should contain only the runtime binary and required resources.

Bad: Tools left in final image

    FROM python:3.11
    RUN apt-get update && apt-get install -y gcc
    COPY . .
    CMD ["python", "main.py"]

Good: Multi-stage

    FROM python:3.11 AS builder
    RUN apt-get update && apt-get install -y gcc
    COPY . .
    RUN pip install --prefix=/install .

    FROM python:3.11-slim
    COPY --from=builder /install /usr/local
    COPY . .
    CMD ["python", "main.py"]

Embedding Secrets or Credentials in Layers

Developers occasionally inject environment variables or tokens during RUN instructions or copy credential files into the image. These secrets get baked into immutable layers and cannot be fully removed later. BuildKit secrets or CI-level secret injection must be used instead to avoid permanent exposure.

Bad: Embedding secrets

    ENV API_KEY=abcd12345

Good: BuildKit secret

    # The secret is mounted only for this RUN step; chain the command that needs it here
    RUN --mount=type=secret,id=api_key \
        export API_KEY="$(cat /run/secrets/api_key)"
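The secret mount only works if the build command supplies a secret with the same `id`. A minimal sketch of the build side, assuming a hypothetical helper `build_with_secret` and a local file that holds the key:

```shell
# Hypothetical helper: pass a secret file to the build without writing it into a layer.
# The id must match the id used in RUN --mount=type=secret,id=api_key.
build_with_secret() {
  image="$1"
  secret_file="$2"
  DOCKER_BUILDKIT=1 docker build \
    --secret "id=api_key,src=$secret_file" \
    -t "$image" .
}
```

For example: `build_with_secret myapp:dev ./api_key.txt`, with the key file kept out of the build context through .dockerignore.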

Running Containers as Root Without a Defined User Strategy

Leaving containers to run as root by default introduces unnecessary privilege elevation at runtime. Defining a dedicated non-root user through USER, with a clear UID/GID, prevents privilege misuse and aligns with runtime hardening requirements now standard in production clusters.

Bad: Running as root by default

    FROM node:20
    WORKDIR /app
    COPY . .
    CMD ["node", "server.js"]

Good

    FROM node:20
    WORKDIR /app
    COPY . .
    USER node
    CMD ["node", "server.js"]

Integrating Dockerfiles into CI/CD and Team Workflows

A Dockerfile is only reliable when the surrounding pipeline supports deterministic builds, consistent caching, and controlled promotion. This section covers how Dockerfiles operate within real CI/CD systems and how teams prevent drift across environments.

CI Build Strategy Using BuildKit, Caching, and Parallelism

BuildKit has become the default build engine due to its ability to run instructions in parallel, securely mount secrets, and reuse cache layers across builds. CI systems should enable remote cache backends, so multiple runners reuse shared layers instead of rebuilding them from scratch. This reduces build time, stabilizes pipeline performance, and prevents noisy changes from invalidating unrelated layers.
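A registry-backed cache is one way to share layers between runners. The sketch below assumes a hypothetical helper `ci_build` and a dedicated cache tag in the same registry; depending on the builder driver in use, exporting a registry cache may require a `docker-container` builder.

```shell
# Hypothetical CI helper: build and push while reading and writing a shared registry cache.
ci_build() {
  image="$1"      # e.g. registry.example.com/myapp:1.3.0 (hypothetical)
  cache_ref="$2"  # e.g. registry.example.com/myapp:buildcache (hypothetical)
  docker buildx build \
    --cache-from "type=registry,ref=$cache_ref" \
    --cache-to "type=registry,ref=$cache_ref,mode=max" \
    -t "$image" \
    --push .
}
```

`mode=max` exports the layers of intermediate stages as well, which matters for multi-stage builds where the expensive work happens in the builder stage.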

Registry Hygiene and Promotion Strategy

Tagging discipline determines how reliably images move across dev, staging, and production environments. Floating tags like latest cause unpredictable deployments, while semantic or commit-based versioning provides full traceability. Promotion should involve copying or retagging validated images rather than rebuilding them to avoid divergence between environments.
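Commit-based tagging and promotion by retagging might look like the sketch below. Both helper names and the repository names are assumptions; `docker buildx imagetools create` adds a tag that points at the existing manifest, so nothing is rebuilt.

```shell
# Hypothetical helper: derive an immutable tag from the current commit.
commit_tag() {
  echo "$1:$(git rev-parse --short HEAD)"
}

# Hypothetical helper: promote by adding a new tag to the already validated image.
promote_image() {
  source_ref="$1"
  target_ref="$2"
  docker buildx imagetools create -t "$target_ref" "$source_ref"
}
```

A pipeline could build and test `$(commit_tag registry.example.com/myapp)`, then call `promote_image` with that reference and a release tag such as `registry.example.com/myapp:1.3.0`.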

Integrating Scanning, SBOMs, and Provenance Metadata

Modern registries and CI platforms generate vulnerability reports, SBOMs, and provenance metadata automatically. Dockerfiles should include labels that integrate cleanly with these systems so operational teams can track ownership, dependencies, and image origins. This transparency supports audits, compliance checks, and long-lived maintenance of production services.
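Where the build itself should attach this metadata, Buildx can generate SBOM and provenance attestations. A minimal sketch, assuming a hypothetical helper `build_attested`, a recent Buildx release, and a registry that accepts attestation manifests:

```shell
# Hypothetical helper: build, attach SBOM and provenance attestations, and push.
build_attested() {
  docker buildx build \
    --sbom=true \
    --provenance=mode=max \
    -t "$1" \
    --push .
}
```

The attestations are stored alongside the image in the registry, which is why the sketch pushes rather than loading the result locally.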

Conclusion

A well-architected Dockerfile transforms build processes into reliable, secure, and efficient pipelines. In 2026, this means mastering multi-stage builds, cache discipline, non-root execution, and reproducible images, not treating the Dockerfile as an afterthought.

If you’re working in container engineering, DevOps automation, or platform architecture, structured training can amplify your impact. Invensis Learning offers DevOps certification courses that map directly to the skills discussed in this guide.

Take your Dockerfile discipline into production-grade intensity, and then build the broader framework to support it through formal training.