Artificial intelligence is no longer an experimental add-on in risk management. It is a core driver reshaping how organizations anticipate and control uncertainty. Predictive analytics, anomaly detection, and decision-intelligence platforms are giving risk leaders real-time insight into threats that once took weeks to identify. Yet AI’s speed and power also introduce new vulnerabilities: model bias, governance gaps, and regulatory scrutiny can amplify risks if not managed well.

In this environment, technical awareness alone is not enough. Professionals require a disciplined framework for governance, stakeholder alignment, and ethical oversight. These qualities are embodied in the competencies defined by the Project Management Institute’s Risk Management Professional (PMI-RMP) certification. This article explores how AI is transforming risk management across industries, and why PMI-RMP skills are essential for leveraging AI responsibly and strategically.

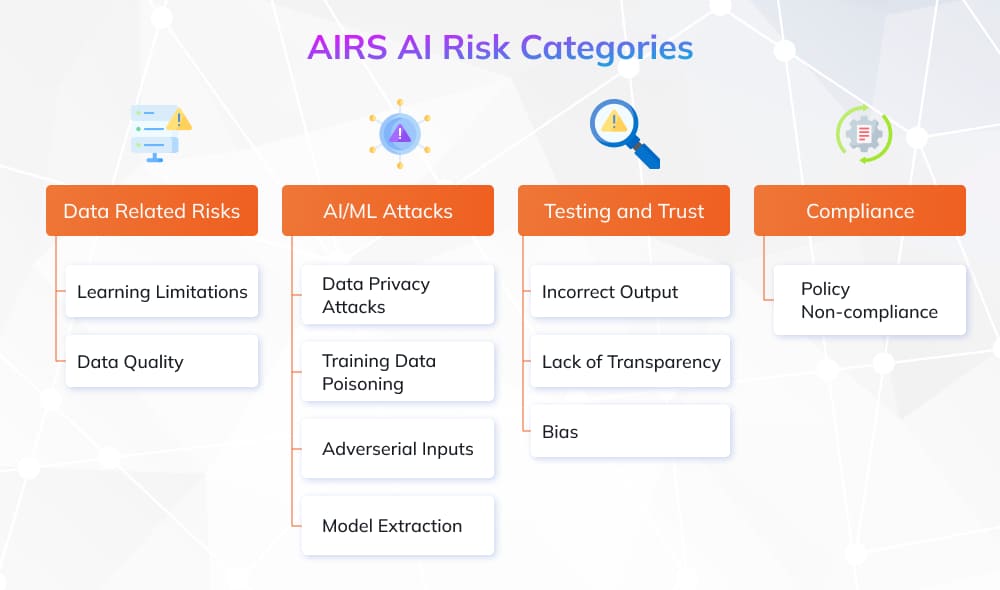

The Hidden Risk: AI Itself

While AI can prevent risks, it also introduces new ones. If not properly governed, these tools can undermine trust and even destabilize entire systems.

The Bank for International Settlements (BIS) has warned that poorly governed AI models in finance could trigger systemic risks, citing cases where biased algorithms led to discriminatory lending and subsequent regulatory fines. Similarly, regulators in both the U.S. and Europe are pushing for mandatory explainability in AI-driven decisions. Without these safeguards, AI can become a hidden liability rather than a source of resilience.

The risks include:

- Bias and fairness: AI systems learn from historical data, which may carry embedded social, economic, or demographic biases. If not corrected, these biases become “hard-coded” into the model. For example, biased loan approval algorithms have disproportionately rejected applications from minority groups, not because of true creditworthiness but because of skewed training data. In 2025, the World Bank highlighted a global bank fined millions for such discriminatory outcomes. These cases demonstrate how bias not only creates ethical concerns but also translates into regulatory penalties and reputational damage.

- Governance gaps: Many organizations race to deploy AI for competitive advantage but skip essential governance steps such as model validation, performance monitoring, and establishing accountability. The result can be anything from compliance failures to operational breakdowns.

- Lack of transparency: Some of the most advanced AI systems, including deep learning models, function as “black boxes.” They generate decisions, such as flagging a transaction as fraudulent or rejecting a loan, without offering a clear explanation of how they reached that conclusion. For risk managers, this is a significant challenge. Executives, auditors, and regulators require traceability to approve decisions, particularly in industries like banking, insurance, and healthcare.

This is where PMI-RMP-certified professionals shine. They apply risk governance principles to AI itself, establishing oversight frameworks, validating models at regular intervals, and documenting decision processes to maintain audit trails. Their ability to integrate governance, accountability, and ethical safeguards ensures that AI adoption strengthens risk management, rather than introducing new vulnerabilities.

How AI Is Transforming Risk Management: The Future

Artificial intelligence is now central to every phase of risk management, fundamentally changing how risks are forecasted, monitored, analyzed, and governed. The following areas illustrate its transformative impact and show how PMI-RMP competencies guide responsible adoption.

Predictive Analytics and Machine Learning: From Reactive to Proactive

Artificial intelligence is enabling organizations to shift from after-the-fact risk assessment to forward-looking risk forecasting. Instead of merely analyzing past failures or defaults, AI models trained on both historical and real-time data can now alert risk teams to anomalies that precede failures, defaults, or cyber intrusions. Rolls-Royce, for example, uses predictive maintenance algorithms on engine telemetry to avoid costly failures and reduce downtime. In financial services, leading firms are harnessing ML models to assess customer behavior and economic trends, spotting credit risk weeks earlier than traditional methods allow.

According to EY’s 2024 Integrity Report, 66% of respondents recognize the need to update compliance programs to accommodate AI’s expanding role, and 47% believe their organizations need to create specific policies for AI-related risks like privacy or fraud. However, while 54% say they are using or planning to use AI in the next two years, only 40% have formal measures in place to govern its deployment responsibly. PMI-RMP’s Risk Strategy & Planning and Monitoring & Reporting domains help bridge this gap. They ensure that forecasts and AI-derived insights are tied into structured, auditable risk practices rather than ad hoc reactions.

Automation and Anomaly Detection: Speed and Precision

Manual reviews of logs or spreadsheets cannot keep pace with modern transaction volumes or evolving fraud tactics. AI-driven anomaly detection systems can now scan millions of events in real time, spotting subtle irregularities that humans might miss. One research paper illustrates this advantage: after AI-based fraud monitoring was integrated, investigation time dropped dramatically, and similar gains are documented elsewhere. According to one study, AI techniques such as anomaly detection and neural networks increase fraud detection accuracy by up to 25–30% compared with traditional rule-based systems and significantly reduce the false positives that have long burdened fraud teams. The study further notes that AI enables real-time detection and response mechanisms that traditional methods cannot match, a capability that is essential in mobile banking environments, where fraud schemes evolve quickly.

Automated dashboards that continuously update key risk indicators free risk managers to focus on higher-level analysis and strategic planning. Under PMI-RMP’s Risk Process Facilitation and Governance competencies, professionals can set meaningful thresholds, validate AI outputs, and ensure that automated monitoring retains human oversight so speed and precision are balanced with accountability.
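To make the idea of thresholds combined with human oversight more concrete, here is a minimal Python sketch. It is an illustration only, not a description of any vendor's system: the function name `flag_anomalies`, the sample amounts, and the 3.5 cutoff are assumptions chosen for the example, and the scoring method (a modified z-score built on the median) is a simple statistical stand-in for the machine-learning detectors discussed above.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return the positions of values whose modified z-score exceeds the threshold.

    The threshold is a hypothetical setting a risk team would tune and document.
    """
    med = median(amounts)
    # Median absolute deviation: a measure of spread that outliers barely shift.
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        return []  # no spread in the data, so nothing can be ranked as unusual

    flagged = []
    for i, x in enumerate(amounts):
        score = 0.6745 * (x - med) / mad  # modified z-score
        if abs(score) > threshold:
            flagged.append(i)
    return flagged


# Illustrative transaction amounts (made-up data).
transactions = [120.0, 95.5, 130.25, 110.0, 101.75, 9800.0, 115.4, 98.6]

# Flagged items are queued for a human reviewer instead of being blocked outright.
review_queue = flag_anomalies(transactions)
print(review_queue)  # [5]
```

In this sketch, flagged items land in a review queue rather than triggering an automatic action, which is one simple way to keep a human in the loop while still benefiting from automated screening.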

Decision Intelligence: Smarter, Explainable Decisions

AI platforms now bring together data from various systems, run rapid simulations, and provide real-time suggestions. A study in Developing a Framework for AI-Driven Optimization of Supply Chains in the Energy Sector (Onukwulu et al., 2023) found that AI can predict equipment failures early, forecast demand changes, and optimize logistics. These abilities reduce unplanned outages and make energy supply chains faster and more efficient.

Decision intelligence offers clear benefits, but it also demands transparency. Organizations must ensure AI-driven recommendations are easy to explain and audit. PMI-RMP-certified professionals use their Stakeholder Engagement and Monitoring & Reporting skills to transform complex AI outputs into clear, actionable insights. This builds trust with executives, regulators, and audit teams while also improving response times.

ESG Risk Modeling: Managing Sustainability Challenges

Environmental, Social, and Governance risks are notoriously complex, and AI provides the analytical power to make sense of them. Machine learning can correlate NOAA climate data, shipping routes, and supplier performance to predict supply chain disruptions caused by extreme weather. At the same time, natural-language processing monitors social media sentiment for early signs of reputational damage.

PwC’s 2025 ESG Pulse reports that 49 percent of large enterprises now use AI analytics for ESG scenario planning. By applying PMI-RMP’s Risk Governance and Strategy & Planning principles, organizations can integrate these ESG insights into enterprise-level risk appetites rather than leaving them siloed within sustainability teams.

Regulatory and Ethical Guardrails: Governing AI Itself

AI’s speed and complexity can easily outrun existing regulations, making governance essential. The Bank for International Settlements (BIS) warns that opaque AI models can create systemic risks if their decisions are not transparent or well-validated. In 2025, BIS reported that a major financial institution was fined for deploying an AI credit model that produced biased loan approvals. That’s an example of how weak oversight can quickly escalate into legal and reputational damage.

PMI-RMP-certified professionals are trained to prevent such failures. They establish clear governance frameworks, regularly validate AI models, and maintain thorough documentation. By embedding these controls, they ensure AI tools meet compliance standards and ethical expectations, turning potential vulnerabilities into a source of competitive strength.

AI-Powered Risk Management Use Cases

Artificial intelligence is not only reshaping theoretical frameworks; it is also producing measurable results in diverse industries. By examining finance, cybersecurity, supply chain operations, and regulatory compliance, we can see how AI’s practical applications are redefining risk management. These examples also highlight the strategic value that PMI-RMP-certified professionals bring.

Financial Risk Management: Credit, Market, and Fraud Risk

Financial institutions are at the forefront of AI adoption for risk. Banks increasingly use AI-driven credit models that incorporate not just traditional scores but also alternative data, such as payment histories and demographic trends, to refine lending decisions. A 2025 BIS report noted that such models have lowered default rates by improving predictive accuracy and reducing bias.

JPMorgan Chase’s AI engines scan millions of transactions in milliseconds to flag suspicious activity, allowing human investigators to intervene before significant losses occur.

PMI-RMP competencies in Risk Governance and Monitoring & Reporting ensure these AI outputs are validated, properly documented, and aligned with enterprise risk appetites rather than accepted blindly.

Cybersecurity and IT Risk

Modern cyber threats change too quickly for manual defenses. AI-powered cybersecurity tools learn what “normal” network traffic looks like. They spot unusual patterns that can signal a breach, malware, or insider activity. FS-ISAC’s Navigating Cyber 2024 report warns that threat actors now use generative AI to scale phishing, social engineering, and automated attacks. Beyond detection, AI helps forecast equipment failures and system outages. It flags anomalies early, so teams can act before services are disrupted. Risk-management leaders stress building AI alerts into structured workflows and validating models often. This ensures AI strengthens defenses instead of becoming a single point of failure.

Supply Chain and Operational Risk

Global supply chains remain vulnerable to disruptions from extreme weather to geopolitical instability, and AI offers the predictive capability needed to stay ahead. Companies now analyze live shipping data, satellite imagery, and climate forecasts to anticipate bottlenecks. AI also supports predictive maintenance in manufacturing plants, where algorithms analyze sensor data to forecast equipment breakdowns. PMI-RMP’s Risk Strategy & Planning and Governance competencies guide professionals in embedding these insights into enterprise-wide risk strategies, ensuring contingency plans are pre-approved and actionable rather than reactive.

Regulatory Compliance and Reporting

Compliance teams face an avalanche of changing regulations. AI-driven natural language processing systems now scan communications, transactions, and policies to flag potential violations or gaps in real time. A multinational bank reported in PwC’s 2025 Compliance Trends Survey that AI tools reduced its compliance review workload by nearly 40% without sacrificing accuracy. These systems can also interpret new regulatory texts, comparing them with existing controls to highlight discrepancies, performing a gap analysis in hours instead of weeks. PMI-RMP-certified professionals play a critical role here by maintaining robust Governance frameworks, ensuring that AI outputs are explainable and documented for regulators, and integrating ethical AI practices into overall compliance strategies.

Preparing for an AI-Powered Risk Future: Why PMI-RMP Matters

With AI becoming integral to risk management, professionals need a new blend of technical awareness and strong foundational risk skills. This is where the Project Management Institute’s Risk Management Professional (PMI-RMP) certification comes into play. PMI-RMP is designed to ensure that risk managers are equipped to handle both traditional risk practices and the emerging challenges of AI-driven environments.

Here’s why PMI-RMP matters more than ever in the age of AI:

Strengthening Risk Governance and AI Oversight

Implementing AI in risk management isn’t just a technical endeavor; it requires robust governance to ensure these powerful tools are used responsibly and effectively. PMI-RMP training emphasizes the establishment of clear risk governance frameworks and processes, which is directly applicable to overseeing AI initiatives.

Professionals with the PMI-RMP certification understand how to define roles, responsibilities, and policies for effective risk management within an organization. They can leverage this knowledge to contribute to AI governance policies that dictate how AI models are developed, validated, deployed, and monitored in alignment with enterprise risk appetites and regulations. Strong governance is essential: according to a PMI report, lacking an AI governance plan can lead to loss of trust among stakeholders and increased security and ethical risks.

By applying risk governance principles, PMI-RMP holders help their organizations avoid these pitfalls, ensuring there is accountability for AI-driven decisions and that AI use aligns with overall business objectives and compliance needs. In essence, PMI-RMP professionals serve as a bridge between technical teams and leadership, translating governance requirements into practice so that AI becomes a trusted aide in risk management rather than a source of new risks.

Gaining Stakeholder Buy-In and Trust for AI Initiatives

Any transformation in risk processes, especially one involving AI, will only succeed if stakeholders support it. One of the competencies PMI-RMP instills is effective stakeholder engagement and communication in the context of risk. Risk managers must often persuade executives, department heads, and front-line teams to embrace new risk strategies or tools. In an AI-powered environment, this means getting buy-in for deploying AI solutions and integrating them into decision workflows.

PMI-RMP-certified professionals are skilled at articulating risk information in business terms and addressing stakeholder concerns. They can explain, for example, how an AI-based predictive analytics tool will reduce the likelihood of project cost overruns, or how an anomaly detection system will safeguard customer data, in language that resonates with each stakeholder group. This ability to communicate the value and purpose of AI in risk management helps build confidence and reduces resistance to change. It is especially important given that fear of the unknown is common with AI adoption; some team members worry AI might replace their jobs or make errors.

By proactively managing these perceptions and setting expectations (another area covered by PMI-RMP’s focus on stakeholder engagement), risk leaders can foster a culture that views AI as a collaborative tool. In fact, studies show that without clear communication and governance, team members may resist AI, hampering its adoption. PMI-RMP professionals mitigate this by ensuring transparency around AI’s role and maintaining human oversight (“human in the loop” approaches) so that stakeholders trust and welcome AI-enhanced risk processes.

Mastering Model Risk Management and Ethical AI

While AI brings powerful predictive capabilities, it also introduces model risk, the risk that the models themselves are wrong, biased, or misused. In sectors like finance, regulators have long required rigorous model risk management (MRM) practices, and these are now being extended to AI and machine learning models.

PMI-RMP certification prepares professionals to identify and assess all types of risks, including the risks inherent in using AI models for decision-making. With this background, PMI-RMPs are well-suited to implement MRM practices for AI. They know how to set up proper validation and review cycles for risk models, ensuring that AI outputs are regularly tested against reality and that any model limitations are understood.

This skill set is increasingly critical: global regulators stress that limited explainability of AI models poses significant challenges for managing model risk, and they call for strong governance, documentation, validation, and monitoring around AI models. In practice, a PMI-RMP professional might help develop guidelines for how an AI risk model is approved for use (e.g., requiring backtesting results, performance benchmarks, and periodic re-validation). They also consider contingency plans if a model fails or produces implausible results, ensuring the organization isn’t blindly dependent on automation.
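As a rough illustration of what a backtesting check with a performance benchmark could look like, consider the Python sketch below. Everything named here is an assumption made for the example: the functions `brier_score` and `needs_revalidation`, the benchmark of 0.03, the tolerance of 0.05, and the sample data are hypothetical, and a real validation policy would define its own metrics and limits. The Brier score is used simply because it is a common way to compare predicted probabilities with what actually happened.

```python
def brier_score(predicted, observed):
    """Mean squared gap between predicted probabilities and actual 0/1 outcomes.

    Lower is better; 0.0 means every prediction was exactly right.
    """
    if not predicted or len(predicted) != len(observed):
        raise ValueError("predicted and observed must be non-empty and equal in length")
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)


def needs_revalidation(predicted, observed, benchmark, tolerance=0.05):
    """Flag the model when its backtest score drifts past the approved benchmark.

    benchmark and tolerance are hypothetical values set at model approval.
    """
    return brier_score(predicted, observed) > benchmark + tolerance


# Made-up default probabilities from a credit model, with two sets of outcomes.
forecast = [0.1, 0.2, 0.9, 0.8]
outcomes_as_expected = [0, 0, 1, 1]
outcomes_after_drift = [1, 0, 0, 1]

print(needs_revalidation(forecast, outcomes_as_expected, benchmark=0.03))  # False
print(needs_revalidation(forecast, outcomes_after_drift, benchmark=0.03))  # True
```

A check like this could run on a schedule, with a `True` result opening a documented re-validation task instead of silently retraining the model.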

Equally important is the focus on ethical AI and bias mitigation, which falls under both model risk and governance. AI systems can inadvertently reflect or amplify biases present in data, leading to unfair or dangerous outcomes, a clear risk to any organization. Through the lens of PMI-RMP’s risk identification and mitigation techniques, professionals can help implement checks for bias and fairness in AI models.

This might involve establishing policies that sensitive attributes (like race or gender) are not unjustifiably influencing risk decisions, and setting up independent audits of AI algorithms. Many institutions now use controls such as ethics committees, bias testing, and feature transparency to avoid discriminatory outcomes.
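One simple form of bias testing can be sketched in a few lines of Python. This is an assumption-laden example rather than a complete fairness audit: the function names, the group labels "A" and "B", and the sample decisions are invented, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance, not from any lending regulation cited in this article.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group was favored
    return min(rates.values()) / highest


# Made-up loan decisions: group A approved 8 of 10, group B approved 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

ratio = disparate_impact_ratio(sample)
needs_review = ratio < 0.8  # hypothetical policy threshold
print(round(ratio, 3), needs_review)  # 0.625 True
```

A low ratio does not prove discrimination on its own, but it gives an independent audit a concrete, repeatable number to investigate.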

A PMI-RMP’s holistic risk perspective ensures these measures are not seen as mere compliance, but as integral to preventing reputational and legal risks. In summary, PMI-RMP holders bring a disciplined approach to managing the risks associated with AI, validating that models are reliable and fair, and that their adoption does not introduce new vulnerabilities.

Ensuring Explainable and Transparent AI Decisions

Another area where certified risk professionals add value is in championing explainable AI (XAI). In risk management, especially in regulated industries like banking, insurance, or healthcare, being able to explain how an AI arrived at a recommendation is crucial for trust and compliance. PMI-RMP emphasizes clear documentation, auditability, and communication of risk information. Those principles directly apply to AI systems: risk managers need to ensure that the people relying on AI-driven insights, whether internal stakeholders or external regulators, can understand them.

This is not just a best practice; it’s increasingly a regulatory requirement in many jurisdictions that automated decisions be explainable. With their background, PMI-RMP professionals are equipped to work with data science teams to demand and interpret explainability reports: for instance, understanding which factors most influenced an AI model’s risk score for a project, or why the AI flagged a particular transaction as high-risk. They can then translate these technical explanations into layman’s terms for decision-makers.
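For a sense of what "which factors most influenced a risk score" can mean in practice, here is a deliberately simple Python sketch. It assumes a linear scoring model, where each factor's contribution is just its weight multiplied by its value; the function `explain_linear_score`, the feature names, and all numbers are hypothetical. More complex models do not decompose this cleanly and generally need dedicated attribution techniques.

```python
def explain_linear_score(weights, features, baseline=0.0):
    """Split a linear risk score into per-factor contributions.

    Returns the total score and the factors ranked by absolute influence.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = baseline + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and one flagged transaction (illustrative values only).
weights = {"amount_zscore": 0.5, "new_device": 1.2, "account_age_years": -0.1}
transaction = {"amount_zscore": 4.0, "new_device": 1.0, "account_age_years": 3.0}

score, ranked = explain_linear_score(weights, transaction)
for name, contribution in ranked:
    # A signed contribution shows whether the factor raised or lowered the score.
    print(f"{name}: {contribution:+.2f}")
```

A ranked list like this is the kind of raw material a risk professional could turn into a plain-language summary, such as "the unusually large amount was the main reason this transaction was flagged."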

Why does this matter? Because explainability drives acceptance. If a board or a risk committee can see a clear rationale for an AI-generated risk alert, it is far more likely to trust the alert and act on it.

Conclusion

Artificial intelligence is undoubtedly the future of risk management. From predictive analytics for risk forecasting to AI-enabled anomaly detection and real-time dashboards, these technologies are helping organizations stay one step ahead of threats. They enable faster decisions, richer insights, and more agile responses than traditional methods ever could. However, maximizing the benefits of AI in risk management isn’t just about technology; it requires skilled professionals who can integrate these tools into sound risk frameworks.

This is why PMI-RMP certification matters in the AI era. PMI-RMP equips risk practitioners with a solid foundation in risk principles and the leadership skills to drive AI initiatives responsibly. Certified risk managers will be the ones who ensure AI is aligned with organizational strategy, that it operates under proper governance, and that its outputs are trusted and actionable.